KPlatform Deployment

How to deploy KPlatform

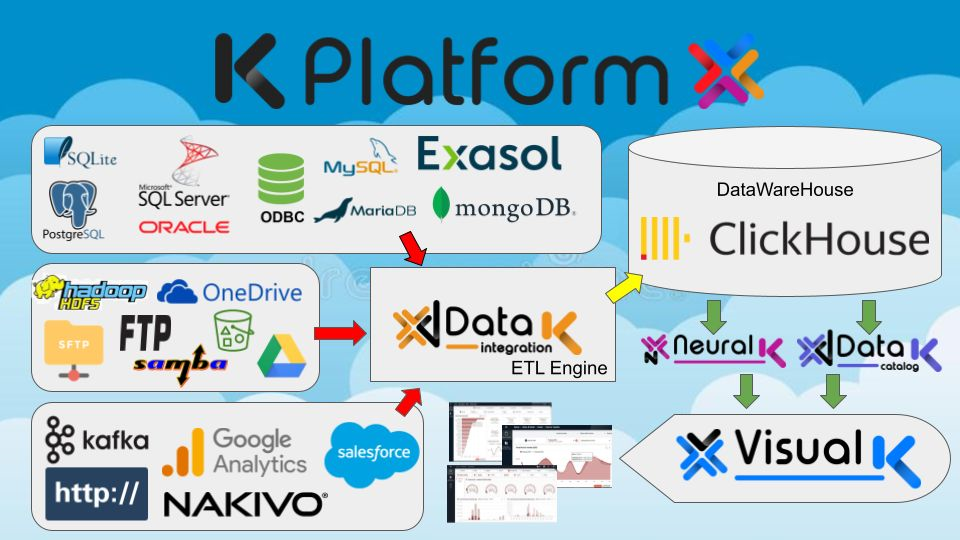

KPlatform, developed by DataLife, is a platform written in PHP (Laravel) on the server side and JavaScript (Angular) on the client side.

It is distributed as Docker images, so Docker or Podman must be installed on the host system.

The platform's backing database can be:

- Postgres

- MySQL o MariaDB

- SQLite
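KPlatform is a Laravel application, so the `DB_CONNECTION` value in `.env.k-platform` presumably follows Laravel's standard driver names (`pgsql`, `mysql`, `sqlite`). The sketch below maps each supported database to that name and to its usual default port. The `db_settings` function is hypothetical. The mapping, including the assumption that MariaDB is served by the `mysql` driver, should be checked against your KPlatform version.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the DB_CONNECTION / DB_PORT pair for a database type.
# Assumes Laravel's standard driver names; MariaDB is assumed to use the mysql driver.
db_settings() {
  case "$1" in
    postgres|postgresql)
      printf 'DB_CONNECTION=pgsql\nDB_PORT=5432\n' ;;
    mysql|mariadb)
      printf 'DB_CONNECTION=mysql\nDB_PORT=3306\n' ;;
    sqlite)
      # SQLite is file-based, so no host or port is needed
      printf 'DB_CONNECTION=sqlite\n' ;;
    *)
      echo "unsupported database: $1" >&2
      return 1 ;;
  esac
}
```

For example, `db_settings postgres` prints the same two values used in the example `.env.k-platform`.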

The whole system also uses Airflow to schedule and run DataK tasks.

KPlatform consists of 5 services:

- init: installation and update service that runs only at startup and exits once it has finished
- www: service that handles HTTP and HTTPS connections and runs PHP scripts (it can be split into 2 services, www-fpm and fpm)
- websocket: service listening on port 6001 of the server that accepts WebSocket connections
- queue: service that runs asynchronous jobs in the background
- scheduler: service that runs scheduled tasks

Docker Compose is normally used to bring the various services together.

First, log in to the registry so that the images can be downloaded:

```
docker login 6a776i61.gra7.container-registry.ovh.net
username: ***
password: ***
```
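For unattended setups, `docker login` can read the password from standard input with `--password-stdin` instead of prompting for it. The sketch below assumes the credentials are provided in the hypothetical variables `KP_REGISTRY_USER` and `KP_REGISTRY_PASSWORD`. The `registry_host` helper, also hypothetical, derives the login host from a registry prefix such as the `REGISTRY` value used later in `.env.k-platform`, by dropping everything from the first `/`.

```bash
#!/usr/bin/env bash
# Strip the repository path from a registry prefix:
# "host/library/" -> "host"
registry_host() {
  printf '%s\n' "${1%%/*}"
}

# Hypothetical helper: log in without an interactive prompt.
# KP_REGISTRY_USER and KP_REGISTRY_PASSWORD are assumed variable names.
registry_login() {
  local host
  host=$(registry_host "$1")
  printf '%s' "$KP_REGISTRY_PASSWORD" |
    docker login "$host" --username "$KP_REGISTRY_USER" --password-stdin
}
```

Usage: `registry_login 6a776i61.gra7.container-registry.ovh.net/library/`.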

Once logged in, prepare at least 3 files:

- docker-compose.yml: defines the services
- .env.k-platform: holds the environment variables used by the various containers
- restart.sh: contains the commands that start the containers
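Before starting, it can help to confirm that all three files are present in the deployment directory. A minimal sketch using a hypothetical `check_deploy_files` helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper: verify that the minimum deployment files exist
# in the given directory (defaults to the current one).
check_deploy_files() {
  local dir="${1:-.}"
  local missing=0 f
  for f in docker-compose.yml .env.k-platform restart.sh; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $f"
      missing=1
    fi
  done
  return "$missing"
}
```

It prints one line per missing file and returns a non-zero status, so it can guard later steps: `check_deploy_files && ./restart.sh`.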

docker-compose.yml

```yaml
version: '3'

services:

  init:
    image: ${REGISTRY}kp:${VERSION_CORESTACK}
    container_name: ${HOST_PREFIX:-kp}-init
    user: "${CORESTACK_UID:-1001}:${CORESTACK_GID:-0}"
    # Every depends_on entry on ${APP_BACKEND_DATABASE} (e.g. postgres) must name
    # a service defined in this same file; remove it if the database is external.
    depends_on:
      - ${APP_BACKEND_DATABASE}
    environment:
      CONTAINER_ROLE: init
    env_file:
      - .env.k-platform
    volumes:
      - ${APP_VOLUME:-app}:/var/www

  www:
    image: ${REGISTRY}kp:${VERSION_CORESTACK}
    restart: unless-stopped
    container_name: ${HOST_PREFIX:-kp}-www
    user: "${CORESTACK_UID:-1001}:${CORESTACK_GID:-0}"
    healthcheck:
      test: ["CMD", "curl", "-L", "${HOST_PREFIX:-kp}-www:8080/api/healthcheck"]
      timeout: 5s
      retries: 5
    depends_on:
      - ${APP_BACKEND_DATABASE}
    ports:
      - ${PORT:-80}:${HTTPD_PORT:-8080}
      - ${SSL_PORT:-443}:${HTTPD_SSL_PORT:-8443}
    environment:
      CONTAINER_ROLE: www
    env_file:
      - .env.k-platform
    volumes:
      - ${APP_VOLUME:-app}:/var/www

  websocket:
    image: ${REGISTRY}kp:${VERSION_CORESTACK}
    restart: unless-stopped
    container_name: ${HOST_PREFIX:-kp}-websocket
    user: "${CORESTACK_UID:-1001}:${CORESTACK_GID:-0}"
    depends_on:
      - www
    ports:
      - ${WEBSOCKET_PORT:-6001}:${PUSHER_APP_PORT:-6001}
    environment:
      CONTAINER_ROLE: websocket
    env_file:
      - .env.k-platform
    volumes:
      - ${APP_VOLUME:-app}:/var/www

  queue:
    # No container_name here: the queue service is scaled to several containers
    image: ${REGISTRY}kp:${VERSION_CORESTACK}
    restart: unless-stopped
    user: "${CORESTACK_UID:-1001}:${CORESTACK_GID:-0}"
    depends_on:
      - websocket
    environment:
      CONTAINER_ROLE: queue
    env_file:
      - .env.k-platform
    volumes:
      - ${APP_VOLUME:-app}:/var/www

  scheduler:
    image: ${REGISTRY}kp:${VERSION_CORESTACK}
    restart: unless-stopped
    container_name: ${HOST_PREFIX:-kp}-scheduler
    user: "${CORESTACK_UID:-1001}:${CORESTACK_GID:-0}"
    depends_on:
      - queue
    environment:
      CONTAINER_ROLE: scheduler
    env_file:
      - .env.k-platform
    volumes:
      - ${APP_VOLUME:-app}:/var/www

volumes:
  app: { }
```

.env.k-platform

```
APP_NAME=YourAppName
VERSION_CORESTACK=4.0.0
CONTAINER=docker
HOST_PREFIX=kp
REGISTRY=6a776i61.gra7.container-registry.ovh.net/library/
HOST=yourdomain.com
TZ=Europe/Rome
#APP_SSL=1
#APP_HTTP_REDIRECT_TO_HTTPS=1
APP_BACKEND_DATABASE=postgres
APP_VOLUME=app
APP_URL=https://yourdomain.com/api
DB_CONNECTION=pgsql
DB_HOST=YourDatabaseHost
APP_TIMEZONE=Europe/Rome
APP_LOCALE=en
DB_PORT=5432
DB_PREFIX=bi_
DB_USERNAME=postgres
DB_SCHEMA=public
XDB_PASSWORD=YourDatabasePassword
DB_PASSWORD_TYPE=crypt
DB_CHARSET=UTF8
HTTPD_PORT=8080
HTTPD_SSL_PORT=8443
PORT=80
SSL_PORT=443
USER_EMAIL=admin@example.com
USER_FIRST_NAME=Admin
USER_LAST_NAME=Admin
USER_USERNAME=admin
USER_PASSWORD=admin
USER_LANGUAGE=it
LOCAL_URL=http://127.0.0.1
PUSHER_APP_HOST=kp-websocket
PUSHER_APP_PORT=6001
PUSHER_APP_ID=kp-app
PUSHER_APP_KEY=kp-key
PUSHER_APP_SECRET=kp-secret
#PUSHER_APP_SCHEME=https
PUSHER_APP_SCHEME=http
#PUSHER_APP_ENCRYPTED=1
PUSHER_APP_CLUSTER=
WEBSOCKET_PORT=6001
#LARAVEL_WEBSOCKETS_SSL_LOCAL_CERT=/var/www/corestack.crt
#LARAVEL_WEBSOCKETS_SSL_LOCAL_PK=/var/www/corestack.key
CORESTACK_UID=1001
CORESTACK_GID=0
APP_QUEUES=5
```
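docker-compose.yml builds image names, container names and volume names from variables such as `REGISTRY`, `VERSION_CORESTACK` and `HOST_PREFIX`. An empty value therefore produces an invalid configuration. The hypothetical `check_env_vars` helper sketched below acts as a pre-flight check. It loads the env file in a subshell, so the current shell is left untouched, and reports every listed variable that is missing or empty.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check: report every listed variable that is
# missing or empty in the given env file.
check_env_vars() {
  local file="$1"
  shift
  (
    # Load the file in a subshell so the caller's shell is not modified
    # shellcheck disable=SC1090
    . "$file"
    rc=0
    for var in "$@"; do
      # ${!var} is bash indirect expansion: the value of the variable named $var
      if [ -z "${!var:-}" ]; then
        echo "not set: $var"
        rc=1
      fi
    done
    exit "$rc"
  )
}
```

Usage: `check_env_vars ./.env.k-platform REGISTRY VERSION_CORESTACK HOST_PREFIX APP_BACKEND_DATABASE APP_VOLUME`.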

restart.sh

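```bash
#!/usr/bin/env bash

# Export every variable defined in .env.k-platform so that docker-compose can
# interpolate ${REGISTRY}, ${VERSION_CORESTACK}, etc. in docker-compose.yml
# (a plain "source" without "set -a" does not export them)
set -a
source "$PWD/.env.k-platform"
set +a

docker-compose down --remove-orphans

# Run the one-shot init container (installation/update) in the foreground
docker-compose up --no-recreate init

# Stop here if init did not finish successfully
init_exit_code=$(docker inspect --format='{{.State.ExitCode}}' "${HOST_PREFIX}-init")
if [ "$init_exit_code" != "0" ]; then
  echo "init start failed"
  exit 1
fi

# Start the long-running services, then scale the queue workers
docker-compose up -d --no-recreate www websocket queue scheduler
docker-compose up -d --no-recreate --scale "queue=${APP_QUEUES:-1}" queue
```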
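`docker-compose up -d` returns as soon as the containers have started, not when the application is ready. Because the www service defines a healthcheck, its state can be read with `docker inspect --format '{{.State.Health.Status}}'`. The generic `wait_for` helper below (a hypothetical name) repeats a command until it prints an expected value or the attempts run out. The retry count and delay in the usage comment are arbitrary.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run a command repeatedly until it prints the expected
# value. Arguments: expected value, number of attempts, delay in seconds,
# then the command to run.
wait_for() {
  local expected="$1" attempts="$2" delay="$3"
  shift 3
  local i out
  for ((i = 1; i <= attempts; i++)); do
    out=$("$@" 2>/dev/null) || true
    if [ "$out" = "$expected" ]; then
      return 0
    fi
    sleep "$delay"
  done
  return 1
}

# Possible usage at the end of restart.sh:
# wait_for healthy 30 2 docker inspect --format '{{.State.Health.Status}}' "${HOST_PREFIX:-kp}-www" \
#   || echo "www is not healthy yet"
```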

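To download the images, run the pull command. docker-compose.yml takes the image names from variables defined in .env.k-platform, so pass that file explicitly:

```bash
docker-compose --env-file .env.k-platform pull
```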
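Compose substitutes the `${...}` references in docker-compose.yml using the shell environment or a file passed with `--env-file`. By default it only reads a file named `.env` in the project directory, so `.env.k-platform` is not used for interpolation on its own. The hypothetical `image_ref` helper reproduces the `${REGISTRY}kp:${VERSION_CORESTACK}` expression, which shows which image a given env file resolves to. With the example values it yields `6a776i61.gra7.container-registry.ovh.net/library/kp:4.0.0`. To print the whole resolved file instead, run `docker-compose --env-file .env.k-platform config`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the image reference that the
# ${REGISTRY}kp:${VERSION_CORESTACK} expression in docker-compose.yml
# resolves to for a given env file.
image_ref() {
  (
    # Read the file in a subshell so nothing leaks into the caller
    # shellcheck disable=SC1090
    . "$1"
    printf '%s\n' "${REGISTRY:-}kp:${VERSION_CORESTACK:-}"
  )
}
```

Usage: `image_ref ./.env.k-platform`.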

Finally, to start the containers, make the script executable and run restart.sh:

```bash
chmod +x restart.sh
./restart.sh
```

The administrator's username and password are set in .env.k-platform:

```
USER_USERNAME=admin
USER_PASSWORD=admin
```
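The example values `admin`/`admin` are only defaults. Assuming the init service uses them to create the administrator account, a quick check before deployment can flag a file that still contains the default or an empty password. The helper below, `check_admin_password`, is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: fail if USER_PASSWORD is empty or still "admin".
check_admin_password() {
  local file="$1" value
  # Take the last USER_PASSWORD= line, as a later assignment wins when sourced
  value=$(sed -n 's/^USER_PASSWORD=//p' "$file" | tail -n 1)
  if [ -z "$value" ] || [ "$value" = "admin" ]; then
    echo "USER_PASSWORD is empty or still set to the default"
    return 1
  fi
}
```

Usage: `check_admin_password ./.env.k-platform`.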

How to enable DataK

To enable DataK, 4 new services must be created:

- airflow-init

- airflow-webserver

- airflow-scheduler

- airflow-scheduler-loop

To do so, add the following services to docker-compose.yml:

```yaml
services:
  # ... existing KPlatform services ...

  airflow-init:
    container_name: ${HOST_PREFIX:-kp}-airflow-init
    environment:
      CONTAINER_ROLE: init
    image: ${REGISTRY}kp-airflow:${VERSION_AIRFLOW}
    env_file:
      - .env.airflow
    volumes:
      - ${AIRFLOW_DAGS_VOLUME:-airflow-dags}:/opt/airflow/dags
      - ${AIRFLOW_LOGS_VOLUME:-airflow-logs}:/opt/airflow/logs
      - ${AIRFLOW_DATA_VOLUME:-airflow-data}:/opt/airflow/data
      - ${AIRFLOW_CONF_VOLUME:-airflow-conf}:/opt/airflow/conf
    user: "${AIRFLOW_UID:-50000}:${AIRFLOW_GID:-0}"
    depends_on:
      - ${AIRFLOW_BACKEND_DATABASE}

  airflow-webserver:
    container_name: ${HOST_PREFIX:-kp}-airflow
    restart: unless-stopped
    environment:
      CONTAINER_ROLE: webserver
    image: ${REGISTRY}kp-airflow:${VERSION_AIRFLOW}
    env_file:
      - .env.airflow
    volumes:
      - ${AIRFLOW_DAGS_VOLUME:-airflow-dags}:/opt/airflow/dags
      - ${AIRFLOW_LOGS_VOLUME:-airflow-logs}:/opt/airflow/logs
      - ${AIRFLOW_DATA_VOLUME:-airflow-data}:/opt/airflow/data
      - ${AIRFLOW_CONF_VOLUME:-airflow-conf}:/opt/airflow/conf
    user: "${AIRFLOW_UID:-50000}:${AIRFLOW_GID:-0}"
    depends_on:
      - ${AIRFLOW_BACKEND_DATABASE}

  airflow-scheduler:
    container_name: ${HOST_PREFIX:-kp}-airflow-scheduler
    restart: unless-stopped
    environment:
      CONTAINER_ROLE: scheduler
    image: ${REGISTRY}kp-airflow:${VERSION_AIRFLOW}
    env_file:
      - .env.airflow
    volumes:
      - ${AIRFLOW_DAGS_VOLUME:-airflow-dags}:/opt/airflow/dags
      - ${AIRFLOW_LOGS_VOLUME:-airflow-logs}:/opt/airflow/logs
      - ${AIRFLOW_DATA_VOLUME:-airflow-data}:/opt/airflow/data
      - ${AIRFLOW_CONF_VOLUME:-airflow-conf}:/opt/airflow/conf
    user: "${AIRFLOW_UID:-50000}:${AIRFLOW_GID:-0}"
    depends_on:
      - ${AIRFLOW_BACKEND_DATABASE}

  airflow-scheduler-loop:
    container_name: ${HOST_PREFIX:-kp}-airflow-scheduler-loop
    restart: unless-stopped
    environment:
      CONTAINER_ROLE: scheduler-loop
    image: ${REGISTRY}kp-airflow:${VERSION_AIRFLOW}
    env_file:
      - .env.airflow
    volumes:
      - ${AIRFLOW_DAGS_VOLUME:-airflow-dags}:/opt/airflow/dags
      - ${AIRFLOW_LOGS_VOLUME:-airflow-logs}:/opt/airflow/logs
      - ${AIRFLOW_DATA_VOLUME:-airflow-data}:/opt/airflow/data
      - ${AIRFLOW_CONF_VOLUME:-airflow-conf}:/opt/airflow/conf
    user: "${AIRFLOW_UID:-50000}:${AIRFLOW_GID:-0}"
    depends_on:
      - ${AIRFLOW_BACKEND_DATABASE}

volumes:
  # ... existing volumes (app) ...
  airflow-dags: { }
  airflow-logs: { }
  airflow-data: { }
  airflow-conf: { }
```

You also need to create a new file named .env.airflow, like this:

```
## AIRFLOW
VERSION_AIRFLOW=4.0.0
AIRFLOW_PORT=8080
AIRFLOW_HOME=/opt/airflow
AIRFLOW_EMAIL=admin@example.com
AIRFLOW_USER=root
AIRFLOW_PASSWORD=YourAirflowPassword
AIRFLOW_DATABASE=airflow
#AIRFLOW_SSL=0
LANG=en_US.UTF-8
LC_ALL=en_US.UTF-8
AIRFLOW_BACKEND_DATABASE=postgres
AIRFLOW_DAGS_VOLUME=airflow-dags
AIRFLOW_LOGS_VOLUME=airflow-logs
AIRFLOW_DATA_VOLUME=airflow-data
AIRFLOW_CONF_VOLUME=airflow-conf
HOST_PREFIX=kp
REGISTRY=6a776i61.gra7.container-registry.ovh.net/library/
AIRFLOW_UID=50000
AIRFLOW_GID=0
HOST=YourDatabaseHost
XDB_PASSWORD=YourDatabasePassword
DB_USERNAME=postgres
DB_CONNECTION=pgsql
DB_PORT=5432
DB_HOST=YourDatabaseHost
TZ=Europe/Rome

## FOR CREATING THE DATABASE
#POSTGRESQL_HOST=YourDatabaseHost
#POSTGRESQL_PORT=5432
#POSTGRESQL_USER=postgres
#POSTGRESQL_PASS=YourDatabasePassword
```
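`.env.k-platform` and `.env.airflow` both define `HOST_PREFIX`, `REGISTRY`, `TZ` and the `DB_*` connection settings. If both files are loaded into the same shell before running docker-compose, the one loaded last wins. Diverging values can therefore silently change container names or image references. The hypothetical `diff_shared_keys` helper compares selected keys across the two files. `HOST` is left out on purpose, since it legitimately differs between the two example files.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report keys whose values differ between two env files.
# Arguments: first file, second file, then the keys to compare.
diff_shared_keys() {
  local a="$1" b="$2" key va vb rc=0
  for key in "${@:3}"; do
    # Last assignment wins, mirroring what happens when the file is sourced
    va=$(sed -n "s/^${key}=//p" "$a" | tail -n 1)
    vb=$(sed -n "s/^${key}=//p" "$b" | tail -n 1)
    if [ "$va" != "$vb" ]; then
      echo "$key differs: '$va' vs '$vb'"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `diff_shared_keys .env.k-platform .env.airflow HOST_PREFIX REGISTRY TZ DB_HOST DB_PORT DB_USERNAME`.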

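The following lines must be added to restart.sh:

```bash
# Near the top of restart.sh, right after .env.k-platform is loaded,
# export the Airflow variables too, so docker-compose can interpolate
# ${VERSION_AIRFLOW}, ${AIRFLOW_BACKEND_DATABASE}, etc.
set -a
source "$PWD/.env.airflow"
set +a

# ...

# At the end of restart.sh: run the one-shot Airflow init container
docker-compose up --no-recreate airflow-init

airflow_init_exit_code=$(docker inspect --format='{{.State.ExitCode}}' "${HOST_PREFIX}-airflow-init")

if [ "$airflow_init_exit_code" != "0" ]; then
  echo "airflow-init start failed"
  exit 1
fi

docker-compose up -d --no-recreate airflow-webserver airflow-scheduler airflow-scheduler-loop
```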

To install Docker and Docker Compose, see the following article:

https://www.simonecosci.com/2023/01/31/install-docker-and-docker-compose-on-ubuntu-22-04/